If you follow this blog, chances are that you've seen, or perhaps even walked through, my guide on building an environment for analytics. That article is from five years ago and I still get questions and feedback on it to this day. To be clear, I still think it is a perfectly valid way to build an environment, and I still primarily use the Docker setup outlined in that guide. However, I find myself gravitating more and more towards a non-Docker environment.

Docker is great and I still use it for many things, but lately I've found that it eats up a lot of resources on my local machine, so I don't always have it running. The base Docker image I shared in the previous article is still published and available for anyone to use, but it has become increasingly difficult to maintain and keep up to date via automation. You can still use that image, and in my experience it still works great, but I have recently gained an appreciation for a more lightweight approach.

These are the tools used in this approach:

- VS Code
- Jupyter
- Python (with virtual environments)
- The CFBD and CBBD Python packages

If you've never used VS Code as an IDE, you should check it out. It has long been my IDE of choice for everything else, and it provides a fantastic experience for working with Jupyter notebooks. What has put it over the top for me, and caused me to use it more and more for data analytics tasks, is GitHub Copilot. GitHub Copilot has become something I can't live without. You may be familiar with my recent rewrite of the CFBD API, website, and most of the associated infrastructure. You may also be familiar with my recent foray into basketball with CollegeBasketballData.com. I would not have been able to do any of this without Copilot. It has probably at least halved my development time on those projects. And it works seamlessly with Jupyter notebooks in VS Code.

Just as with the previous guide, this guide should work whether you are on Windows, Mac, or Linux. I am a Windows user, and if you are also on Windows I still highly recommend setting up Windows Subsystem for Linux (WSL) with your favorite Linux flavor (I use Ubuntu). I do all my development (personal and professional) exclusively in WSL.

The prerequisites are that you have the following installed:

You will also need some VS Code extensions, at the very least the Python and Jupyter extensions. Here is the list of extensions I am running for this tutorial:

Open up a terminal window. Let's create a directory called jupyter and move into that directory.

```bash
mkdir jupyter
cd jupyter
```

Next, we will create a Python virtual environment. This is always good practice, as it allows you to work with different Python versions and package versions across different folders/repos.

```bash
python -m venv ./venv
```

This should have created a venv folder containing the Python binaries and some scripts. We're going to activate the virtual environment we just created by running:

```bash
source ./venv/bin/activate
```

Note that this command may differ on Mac and on non-WSL Windows. Refer to the documentation linked above for instructions specific to those operating systems.
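As a rough illustration of how the activation script's location varies by platform, the sketch below builds the expected path from `os.name`. It assumes the default layout created by Python's built-in `venv` module, where Windows environments keep their scripts under `Scripts` and POSIX systems (Linux, WSL, macOS) use `bin`. The helper name `activate_script` is hypothetical.

```python
import os
from pathlib import PurePosixPath, PureWindowsPath


def activate_script(venv_dir: str = "venv", os_name: str = os.name) -> str:
    """Return the expected activation script path for a venv.

    Hypothetical helper: assumes the default layout produced by `python -m venv`.
    """
    if os_name == "nt":
        # Native Windows: venv\Scripts\activate (activate.bat and Activate.ps1 live here too)
        return str(PureWindowsPath(venv_dir, "Scripts", "activate"))
    # Linux, WSL, and macOS: venv/bin/activate, loaded with `source`
    return str(PurePosixPath(venv_dir, "bin", "activate"))


if __name__ == "__main__":
    print(activate_script())
```

On WSL this prints `venv/bin/activate`, matching the `source` command used above.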

Next, we'll install a list of commonly used Python packages. Feel free to add any others you may need. We will also write these packages into a requirements.txt file for easy installation.

```bash
pip install cbbd cfbd ipykernel matplotlib numpy pandas scikit-learn xgboost
pip freeze > requirements.txt
```
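The point of requirements.txt is that the same set of packages can be reinstalled elsewhere with `pip install -r requirements.txt`. If you later want to confirm that the active environment still matches the pinned versions, a small standard-library sketch like this one could help. It assumes the simple `name==version` lines that `pip freeze` typically writes and skips anything else; `parse_requirements` and `find_mismatches` are hypothetical helper names.

```python
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path
from typing import Dict, Optional


def parse_requirements(text: str) -> Dict[str, str]:
    """Map package name -> pinned version for simple `name==version` lines."""
    pins: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blanks, comments, and non-pinned entries (e.g. `-e ...` or `name @ url`)
        if not line or line.startswith("#") or "==" not in line:
            continue
        name, _, pinned = line.partition("==")
        pins[name.strip()] = pinned.strip()
    return pins


def find_mismatches(pins: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Return {name: installed version or None} for every pin that is not satisfied."""
    problems: Dict[str, Optional[str]] = {}
    for name, wanted in pins.items():
        try:
            installed: Optional[str] = version(name)
        except PackageNotFoundError:
            installed = None  # not installed in the active environment
        if installed != wanted:
            problems[name] = installed
    return problems


if __name__ == "__main__":
    req = Path("requirements.txt")
    if req.exists():
        print(find_mismatches(parse_requirements(req.read_text())) or "All pins satisfied")
```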

Let's create an empty Jupyter notebook and open this directory in VS Code.

```bash
touch test.ipynb
code .
```
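`touch` isn't available in every shell (the native Windows Command Prompt, for example). As an alternative, the sketch below writes a minimal, empty notebook using only the standard library. It assumes the nbformat 4 JSON structure (top-level `cells`, `metadata`, `nbformat`, and `nbformat_minor` keys); `create_empty_notebook` is a hypothetical helper name.

```python
import json
from pathlib import Path


def create_empty_notebook(path: str = "test.ipynb") -> Path:
    """Write a minimal nbformat-4 notebook with no cells (hypothetical helper)."""
    notebook = {
        "cells": [],
        "metadata": {},
        "nbformat": 4,
        "nbformat_minor": 5,
    }
    target = Path(path)
    # Refuse to overwrite a notebook that already exists
    if target.exists():
        raise FileExistsError(f"{target} already exists")
    target.write_text(json.dumps(notebook, indent=1), encoding="utf-8")
    return target


if __name__ == "__main__":
    print(f"Created {create_empty_notebook()}")
```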

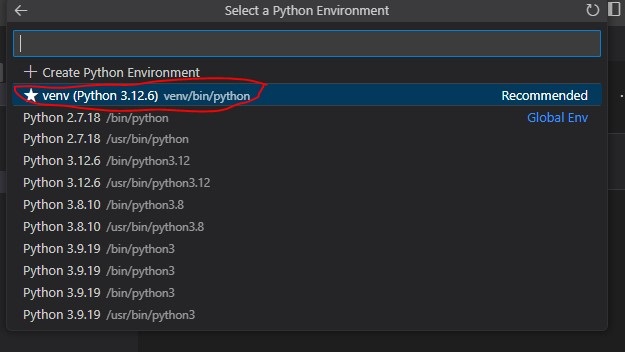

Within VS Code, open the test.ipynb file from the left sidebar. Then click "Select Kernel" in the top-right, and then "Python Environments…" from the dropdown list that appears.

Select the environment labeled venv. There should be a star next to it.
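Once the kernel is selected, a quick check in the first cell can confirm that the notebook is really running inside the virtual environment rather than the system Python. This sketch relies on the standard `sys.prefix` and `sys.base_prefix` comparison, which differ when a `venv` is active; the function name `running_in_venv` is hypothetical.

```python
import sys


def running_in_venv() -> bool:
    """True when the interpreter was started from a virtual environment."""
    # Inside a venv, sys.prefix points at the venv folder, while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


print("Interpreter:", sys.executable)
print("Inside a virtual environment:", running_in_venv())
```

If the kernel is set up correctly, the interpreter path should point into the jupyter/venv folder.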

Now we can begin working in the Jupyter notebook. Let's start by importing the cfbd and pandas packages and running the code cell.

```python
import cfbd
import pandas as pd
```

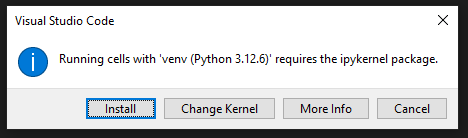

If you didn't install the ipykernel package with the list of packages above, you may be greeted with a prompt to install it. Just click 'Install' and wait.

Next, let's configure the CFBD package with our CFBD API key. If you do not have a key, you can get one from the website. Replace the placeholder text below with your personal key.

```python
configuration = cfbd.Configuration(
    access_token='your_key_here'
)
```
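Hard-coding the key works, but it's easy to accidentally commit or share a notebook with the key still in it. One alternative, sketched below, is to read the key from an environment variable. The variable name `CFBD_API_KEY` and the helper `get_api_key` are my own assumptions, not something the CFBD package requires; the returned string would simply be passed as `access_token` to `cfbd.Configuration`.

```python
import os
from typing import Mapping, Optional


def get_api_key(var_name: str = "CFBD_API_KEY",
                env: Optional[Mapping[str, str]] = None) -> str:
    """Read the API key from the environment (hypothetical helper and variable name)."""
    source = os.environ if env is None else env
    key = source.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable to your CFBD API key first."
        )
    return key


# Usage in the notebook (requires the cfbd package):
#   configuration = cfbd.Configuration(access_token=get_api_key())
```

The variable likely needs to be set in the environment the Jupyter kernel inherits, for example exported in your shell before running `code .`.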

We can now call the API to grab a list of games:

```python
with cfbd.ApiClient(configuration) as api_client:
    games_api = cfbd.GamesApi(api_client)
    games = games_api.get_games(year=2024, classification='fbs')

len(games)
```

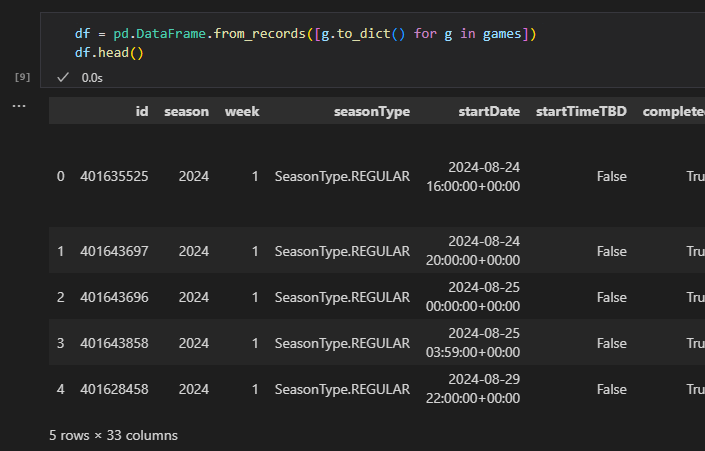

In my example, 920 games were returned. It's pretty easy to load these into a Pandas DataFrame.

```python
df = pd.DataFrame.from_records([g.to_dict() for g in games])
df.head()
```

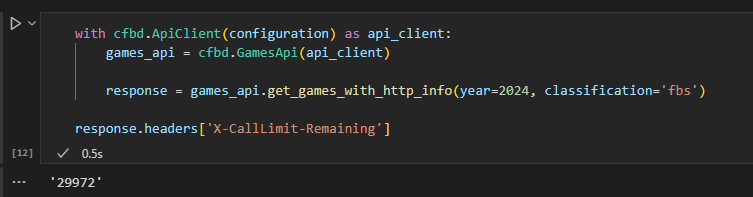

One neat trick with the Python library is that every method has a special version that also includes the HTTP response metadata. Simply append _with_http_info to the end of the method name. You can use this to keep track of how many monthly calls you have remaining.

```python
with cfbd.ApiClient(configuration) as api_client:
    games_api = cfbd.GamesApi(api_client)
    response = games_api.get_games_with_http_info(year=2024, classification='fbs')

response.headers['X-CallLimit-Remaining']
```

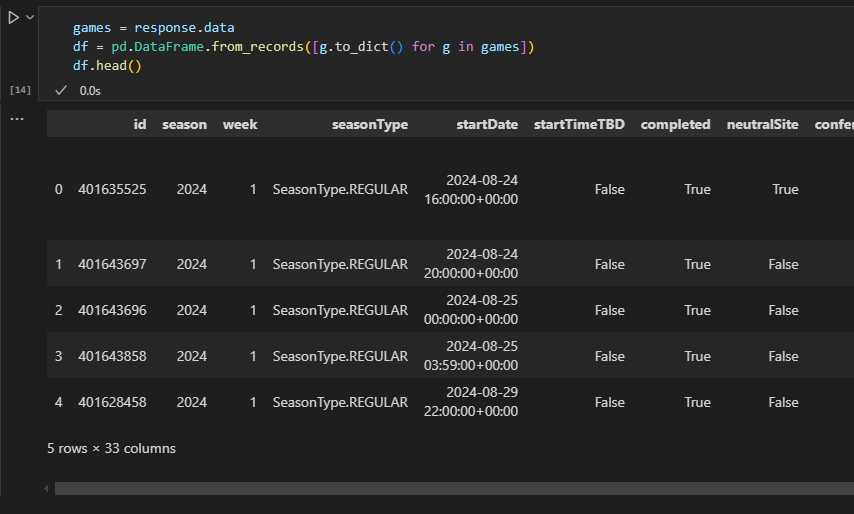

Then access the same data as before via the response.data field.

```python
games = response.data
df = pd.DataFrame.from_records([g.to_dict() for g in games])
df.head()
```
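If you want to act on the `X-CallLimit-Remaining` header rather than just eyeball it, a small helper like the following could convert it to an integer and flag when you're running low. The header name comes from the example above; the threshold of 100 and the helper names are arbitrary assumptions. It does a case-insensitive lookup, since HTTP header names are case-insensitive, and accepts any dict-like headers object (or None).

```python
from typing import Mapping, Optional


def remaining_calls(headers: Optional[Mapping[str, str]],
                    header_name: str = "X-CallLimit-Remaining") -> Optional[int]:
    """Return the remaining monthly call count, or None if the header is absent or invalid."""
    if not headers:
        return None
    wanted = header_name.lower()
    for key, value in headers.items():
        # HTTP header names are case-insensitive, so compare lowercased
        if key.lower() == wanted:
            try:
                return int(value)
            except (TypeError, ValueError):
                return None
    return None


def warn_if_low(headers: Optional[Mapping[str, str]], threshold: int = 100) -> bool:
    """Print a warning and return True when fewer than `threshold` calls remain."""
    left = remaining_calls(headers)
    if left is not None and left < threshold:
        print(f"Warning: only {left} API calls left this month")
        return True
    return False


# Usage, after calling any *_with_http_info method:
#   warn_if_low(response.headers)
```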

And that's all there is to it!

I do still love Docker for many things and think it's still perfectly adequate for a data analytics environment. However, you can see how this approach is much more lightweight and lets you leverage the full capabilities of VS Code. We didn't really dig into the GitHub Copilot extension. If you haven't installed it, I can't recommend it enough; it is a game changer.

Another tweak some people make is swapping out pip for conda. However, I've found the above setup to be more than adequate. Anyway, happy coding!